With big bucks to be made online, and Google continuing to be the most important key to unlocking them, this article looks at how search engines have evolved over the past two decades and how SEOs have battled, both ethically and otherwise, to rank their websites in those coveted top positions.

The Early Days Of Search

Finding a website in 1991 was pretty straightforward. Why? Well, because there was only one! Tim Berners-Lee decreed ‘let there be websites’ and launched the World Wide Web.

By the end of 1997, finding your favourite table tennis bat store was starting to get a little more complicated. There were now 1,681,868 websites, and if you were a business owner (selling table tennis bats or otherwise), getting your site to the top of the listings was becoming increasingly important as the media reported success story after success story during the height of the first dot-com boom.

People were beginning to use search engines to find the content they were looking for, and in the days BG (before Google) there were several big players vying to deliver search results to you:

- Yahoo

- Lycos

- Excite

- AltaVista

- MSN

So how did these early search engines rank content?

Well, in those days it was all about keywords: specifically, using the keywords you wanted to rank for as often as you could, in as many places as possible on the page. This quickly became known as keyword stuffing.

There was no real concept of relevance back then; you could use pretty much whatever keywords you wanted (whether related to your site or not) and you would start to rank for them. Spam techniques such as hidden text (e.g. black text on a black background) were rife.

There was also a heavy emphasis on meta tags, including the now largely pointless meta keywords tag (I like Yoast’s ‘no idea why you would use this’). Back then, our table tennis bats site would probably have had something like the following in its head section:

`<title>Table tennis bats, table tennis, bats for table tennis</title>` (forget the name of the company!)

`<meta name="description" content="Buy our table tennis bats and bats for table tennis. The best table tennis bats on the internet.">`

`<meta name="keywords" content="table tennis bats, red table tennis bats, green table tennis bats, big bats, small bats, pink table tennis bats with polkadots, buy table tennis bats, shop for bats, ...">` (there was no real length limit then either, so this would have gone on and on)

The body text would invariably repeat the keywords from the meta keywords tag in a paragraph: just a list of keywords, with no attempt to form proper sentences or proper English.

And there was a good chance your website would rank well!
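To see why stuffing paid off, here is a deliberately naive Python scorer that ranks pages purely by how many times the query words appear on them. It is a toy illustration under that assumption, not a reconstruction of any real engine, and the page names and text are hypothetical.

```python
import re

def keyword_score(page_text, query):
    """Naive relevance: count how many times each query word appears on the page."""
    words = re.findall(r"[a-z]+", page_text.lower())
    return sum(words.count(term) for term in query.lower().split())

# Hypothetical pages: one written for people, one stuffed for the engine
pages = {
    "honest-shop": "We sell handmade table tennis bats, built by club players for club players.",
    "stuffed-shop": "table tennis bats table tennis bats buy table tennis bats cheap table tennis bats",
}
query = "table tennis bats"

# Highest keyword count first, as a count-driven engine would order them
ranking = sorted(pages, key=lambda name: keyword_score(pages[name], query), reverse=True)
print(ranking)
```

The stuffed page wins easily, even though the honest page is the one a shopper would actually want to visit.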

So, as you can imagine, things were getting messy, with the early SEOs cottoning on to this and everyone doing the same thing. Competitor ranking well? Just copy their keywords and, boom, you would leap up the search results. Relevance was becoming an issue and the search results were quickly filling up with spam. It was time for the search engines to evolve.

Google Enters The Market (1998)

It was clear that the on-page model for ranking search results was not working and was easily manipulated. Step forward two Stanford University PhD students, Larry Page and Sergey Brin, and the rebranding of their ‘BackRub’ search engine as Google.

The origins of Google are well documented, but briefly…

Page and Brin recognised that you could not solely trust the information provided to you on a web page and conceptualised a way of using the academic ‘citation’ model to rank web pages.

Crudely, every page on the web was born with a certain amount of PageRank. Each time one page linked to (cited) another, it passed a proportion of its weight over to the target page. So, pages with lots of links pointing to them would accrue PageRank and be capable of ranking higher in Google’s search results. Oh, and a link from a page that itself had lots of links pointing to it (giving it a high PageRank) was worth far more to your site. But let’s move on; if you want to find out more, here is the full spec of the original algorithm.
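To make the citation idea concrete, here is a minimal Python sketch of the iterative calculation from Page and Brin’s paper, in the normalised form where all scores sum to 1 and with the damping factor of 0.85 the paper suggests. The four-page ‘web’ and its page names are hypothetical, pages with no outbound links are not handled, and this is a teaching simplification rather than how Google computes rankings today.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank by power iteration.

    links maps each page to the list of pages it links to.
    Assumes every page has at least one outbound link (no dangling pages).
    """
    pages = list(links)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # every page starts with an equal share
    for _ in range(iterations):
        # Each page keeps a small baseline share regardless of links
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page, targets in links.items():
            share = rank[page] / len(targets)  # a page's rank is split across its outbound links
            for target in targets:
                new_rank[target] += damping * share
        rank = new_rank
    return rank

# Hypothetical four-page web: three pages link to the bat shop, nothing links to the forum
web = {
    "bat-shop": ["club-news"],
    "club-news": ["bat-shop", "blog"],
    "blog": ["bat-shop"],
    "forum": ["bat-shop", "blog"],
}

ranks = pagerank(web)
for page, score in sorted(ranks.items(), key=lambda item: item[1], reverse=True):
    print(f"{page}: {score:.3f}")
```

The bat shop ends up with the highest score because three pages cite it, while the forum, with no inbound links at all, is left with only the baseline share.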

The other factor which was weighted into the algorithm was anchor text, i.e. the text used to link to the target webpage. So, if our table tennis bats site had lots of links pointing to it with the words table tennis bats (or variants of the same) in the anchor text then chances are we would be near the top of the search results and shifting our lovely bats by the lorryload. This applied to internal links (links within your site) too.

To begin with, this worked very well, as sites with real value and strong relationships had naturally attracted a lot of links over their lifetime. The relevance of Google’s search results quickly generated huge buzz, and by the end of the decade Google was becoming the dominant player in the search market, with the other search engines frantically playing catch-up and attempting to implement their own link-based algorithms.

Link Spam (1999 – 2001)

So, the search results were getting more relevant but, as before, the SEOs of the time quickly cottoned on to what was going on, and link exchanging became the name of the game.

Websites the world over added a ‘links’ page and started blasting out emails asking to exchange links. The links pages of companies that had used aggressive SEOs typically contained hundreds of links to completely off-topic, unrelated websites, which in turn linked back from their own links pages, full of similarly off-topic and irrelevant resources.

Link hunting was in overdrive and it didn’t matter where you got the links from: to survive in the early Google world, you had to gather as many links as you could.

Clearly this went against the original purpose of PageRank and the citation model of ranking websites. These were not votes; they were just links for the purpose of boosting the site’s search engine rankings.

And worse was to come…

Link Farming (2001 – 2003)

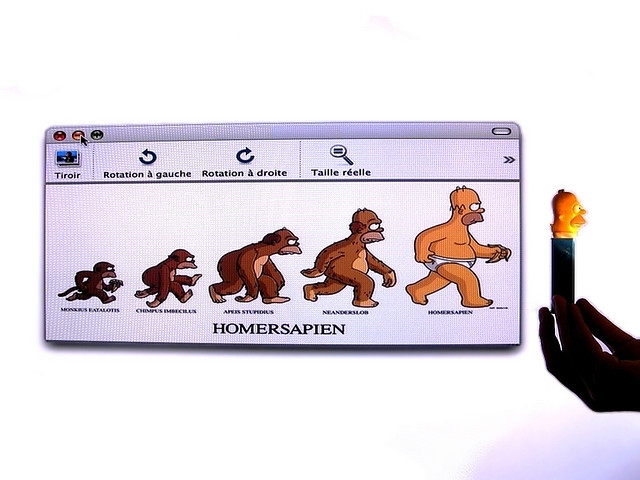

Just as early humans tired of the hours spent searching and scavenging for food, our SEOs soon realised that they could easily manipulate Google’s results (by this point it was all about Google) by farming links.

A link farm was effectively link exchange on a huge scale: a website (or facilitator) through which thousands of websites all linked back and forth to each other to increase their PageRank. This was link spam on an industrial scale and, as the agricultural revolution swept the web, relevance was becoming a real issue again.

So, around 2003/2004, Google started fighting back: first by devaluing reciprocal links and then by deindexing known link farms and spammers.

If you wanted to achieve those top results, what you now needed were one-way links to your website. Fine if you were a university publishing amazing research papers, but if you were the SEO company for our table tennis bats site, how would you get them?

The Industrial Revolution (2002 – 2005)

At this point, blogging was really coming into its own and the web was changing from a static to a more social and interactive experience. Platforms such as WordPress and Blogger made it easy for writers to set up their own sites without any technical knowledge, and thousands of new blogs were springing up every day.

Comments were the lifeblood of blogs, and for our unscrupulous SEOs this provided the perfect opportunity to blast their links everywhere with spam, spam and more spam!

Software was used (and continues to be used) to seek out blogs and post thousands of nonsense comments with the sole purpose of gaining links, either in the body of the comment or through the keyword-stuffed ‘name’ field, which provided an opportunity to link back to the commenter’s own site.

When browsing your favourite blogs, you might have seen lots of comments that looked a bit like this:

Name: Table Tennis Bats

Comment: Nice blog!

Which wasn’t really adding much to the discussion!

This automation was blasting blogs with spam and something had to be done, so in 2005 Google’s Matt Cutts (by then head of webspam) and Blogger’s Jason Shellen proposed a new attribute for links in blog comments: the now infamous rel="nofollow". Google amended its algorithm so that links using this attribute would no longer pass PageRank or count towards a website’s search engine rankings.
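For illustration, here is how a crawler could separate links that pass PageRank from those marked rel="nofollow", using only Python’s standard html.parser module. The class name and the example.com URLs are hypothetical, and this is a sketch of the idea rather than Google’s implementation.

```python
from html.parser import HTMLParser

class LinkClassifier(HTMLParser):
    """Splits <a href> links into followed and nofollow buckets."""

    def __init__(self):
        super().__init__()
        self.followed = []
        self.nofollow = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attrs = dict(attrs)  # attrs arrives as a list of (name, value) pairs
        href = attrs.get("href")
        if not href:
            return
        # rel can hold several space-separated values, e.g. "external nofollow"
        rel_values = (attrs.get("rel") or "").lower().split()
        if "nofollow" in rel_values:
            self.nofollow.append(href)   # would not pass PageRank
        else:
            self.followed.append(href)   # counts as a normal citation

# A hypothetical spam comment plus an ordinary link
comment_html = """
<p>Nice blog!</p>
<a href="http://example.com/table-tennis-bats" rel="nofollow">Table Tennis Bats</a>
<a href="http://example.com/about">About the author</a>
"""

parser = LinkClassifier()
parser.feed(comment_html)
print("Passes PageRank:", parser.followed)
print("Ignored (nofollow):", parser.nofollow)
```

Once blog platforms added the attribute to comment links automatically, the spammer’s keyword-stuffed ‘name’ link landed in the ignored bucket.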

Once again, companies that had employed dodgy SEOs to boost their rankings took a tumble down the results.

Paid Links (2004 – 2006)

Our search engines were now becoming pretty advanced, and reciprocal linking and link farms were on their way out. But, just like before, as Google combated one way in which its PageRank algorithm was being manipulated, SEOs found another way round it, and a huge new market was created in buying and selling links.

Link brokers such as Text Link Ads acted as middlemen for websites desperate to buy links from the huge wave of blogs and Web 2.0 sites that were exploding across the internet. Companies would rent links on a post or home page, with pricing based on the (toolbar) PageRank of the linking blog.

It was not uncommon in those days to see a dozen or so sidebar links on a popular blog linking out to completely unrelated sites with the anchor text the target site wanted to rank for.

Our table tennis bats company might be renting links from dozens of blogs at anything from $5 to $50 a month, depending on PageRank, and again it would go zooming up the search results. So, once again, things had fallen far from Google’s utopian view of the web and it was time for Google to lay down the law.

Google Begins To Penalise Paid Links (2007 – 2008)

In 2007, Google began to wage war on paid links. It had already launched the now widely used nofollow attribute in 2005, which could be added to a link to stop it passing PageRank, and in April 2007 it decreed that all paid links must use this attribute, otherwise the website selling the links would be penalised.

Overnight, thousands of websites that had been selling links had their toolbar PageRank reduced and lost their search rankings, including many high-profile businesses.

Not only that, Google encouraged webmasters to actively report websites they suspected of buying (or selling) links. This blog post from Matt Cutts, head of Google’s webspam team, explained exactly how it should be done.

Our hypothetical table tennis bats site would have dropped down the rankings as the sites it had been renting links from were penalised.

PageRank Sculpting (2007 – 2009)

As Google waged war against artificial link building and PageRank dwindled, SEOs started looking back at things they could do on-site to increase the ranking power of their key pages. This was the era of the SEO artists known as PageRank sculptors.

The theory was that if your website has a certain amount of PageRank to pass around, you could use the nofollow attribute creatively to control how it flowed between your pages. Certainly, you wouldn’t want to be passing PageRank to pages like your privacy policy or returns policy. The theory went…

- Let’s say a website’s homepage has 100 points of PageRank (or ranking juice, whatever) to pass on through its links.

- Link to 100 pages and each page receives only 1 point.

- However, add the nofollow attribute to 90 of those links and, suddenly, our 10 key pages get 10 points each.

And, for a while, it did actually work. I know because I used the tactic!

That was until Google changed the algorithm in 2009 so that a page’s PageRank was divided between all of its links, regardless of whether they had the nofollow attribute or not.

So now, the same setup would pass just 1 point to each of our key pages, and the other 90 points of PageRank would be lost forever 🙁
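The arithmetic before and after the 2009 change can be sketched in a few lines of Python. This is a toy model under stated assumptions: it uses the round numbers above, ignores the damping factor, and the function name is hypothetical.

```python
def sculpting_outcome(points, total_links, nofollow_links, post_2009_rules):
    """Return (points per followed link, points lost to nofollow links).

    Toy model: no damping factor, every link treated equally.
    """
    followed_links = total_links - nofollow_links
    if post_2009_rules:
        per_link = points / total_links    # split across every link, nofollow included
        lost = per_link * nofollow_links   # shares assigned to nofollow links simply evaporate
    else:
        per_link = points / followed_links  # sculptors' assumption: nofollow links dropped out of the split
        lost = 0.0
    return per_link, lost

before = sculpting_outcome(100, 100, 90, post_2009_rules=False)
after = sculpting_outcome(100, 100, 90, post_2009_rules=True)
print(before)  # (10.0, 0.0)
print(after)   # (1.0, 90.0)
```

Under the new rules, nofollowing a link no longer redirected its share to your key pages; it just threw that share away.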

And with that, PageRank sculpting was dead.

Combating Duplicate Content (2004 – 2011)

We’re jumping back a bit here, but this is a part of the evolution of search engines and SEO, which started back in 2004 and really hit the fan in 2011.

I have already discussed link exchanging and some of the black hat methods of SEO, such as link farms, but during the middle of the decade a popular way of building backlinks was article marketing, which was seen as a clean way of link building. SEOs would write an article related to their topic and publish it to an article directory, such as EzineArticles. The article could then be republished by other bloggers and websites, with an attribution link back to the website that had provided the article.

The website would effectively gain links from the original article on the article directory site, plus any other sites that syndicated the content. It was common practice to use keyword-rich anchor text in these backlinks.

Unfortunately, this started to be abused, and low-quality articles were pumped out en masse for the sole purpose of gaining links. The search results also filled up with duplicate versions of the same article.

So, the first step Google took to counter this was around 2004, when it started to filter out duplicate content. Effectively, only the original source of the content would rank (or sometimes the copy with the highest PageRank). This was a logical step: in Google’s eyes (quite correctly), once it had provided access to the information, there was no need to list the same content again.

A great many ecommerce sites suffered collateral damage from these updates, as many of them had simply copied the manufacturer’s product descriptions (more on this topic and why it is a big no-no here), triggering the duplicate content filter and pushing themselves out of the search results. Anyone reading this article who was working on SEO at the time will recall the hell that was Google’s supplemental results.

As original content was now required to rank a page, the horrible practice of article spinning became commonplace. This involved writing an article once and then using an algorithm to ‘spin’ it, swapping words to create what it was hoped Google would see as a unique piece of content. The other approach was to take three or four articles and mix them together. If you have ever encountered a spun article, you will recognise it immediately: it makes no sense whatsoever and exists for the sole purpose of gaining a link and bypassing duplicate content filters. Whilst an article about red table tennis bats might not be riveting reading at the best of times, trying to read a spun one would probably make you lose the will to live.
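Google has never published exactly how its duplicate content filter works, but a classic, publicly documented technique for spotting near-duplicate text is to break documents into overlapping word sequences (‘shingles’) and measure their overlap with Jaccard similarity. The Python sketch below uses that technique, not Google’s own, with hypothetical example sentences: an exact syndicated copy scores 1.0, while a lightly spun version scores much lower, which is precisely the gap spinners were trying to exploit.

```python
import re

def shingles(text, size=3):
    """Return the set of overlapping word sequences (shingles) of the given size."""
    words = re.findall(r"[a-z']+", text.lower())
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}

def jaccard(a, b):
    """Share of shingles two texts have in common (1.0 = identical sets)."""
    sa, sb = shingles(a), shingles(b)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)

# Hypothetical texts: an original, a straight copy and a lightly spun version
original = "Red table tennis bats give you extra spin and control on every shot."
syndicated = "Red table tennis bats give you extra spin and control on every shot."
spun = "Red table tennis bats give you additional spin and command on every shot."

print(round(jaccard(original, syndicated), 2))
print(round(jaccard(original, spun), 2))
```

Swapping just two words breaks most of the shingles, which is why spun text could slip past a simple overlap check while still reading badly to a human.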

Spun articles started to litter the web and, once again, Google’s results were compromised. Its nuclear bomb response came in February 2011 with the rollout of the first Panda update.

Search Today (2011 – 2014)

There are two animals that most webmasters will avoid on a visit to the zoo: the panda and the penguin. For the last couple of years, these two words (the names of two Google algorithm updates) have struck fear into the hearts of webmasters the world over. I will go into these updates in more detail in future articles, but here is a brief summary:

Panda (2011) – targeted low-quality sites with thin content, scraped text etc. Site-wide penalties were introduced, instead of just the offending page being penalised.

Penguin (2012) – targeted spammy SEO tactics and unnatural linking.

These two updates have been the topic of great debate in the SEO community. On the one hand, they filtered out lots of spam sites and scrapers (sites that had stolen other people’s content), but many reputable sites that had not recognised the importance of unique content were also affected. Rankings were decimated, with webmasters reporting drops of up to 90% in search engine traffic.

The Penguin update turned traditional ideas of link building on their head. Until then, the general idea was to use your keywords as anchor text wherever possible; after Penguin, however, this is seen as unnatural linking and will probably trigger a penalty. All those links we had built with the exact anchor text ‘table tennis bats’ would probably now see our site drop like a stone.

Diversity in anchor text is now seen as key, with keywords making up a small percentage of links and more focus on branded terms (the name of the site) and generic text such as ‘click here’ and ‘this site’, which until this point SEOs had avoided as wasted opportunities. I believe this is a good thing and takes us back to the citation model of linking but, like I said, I will go into more detail in a separate article!
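To check how diverse a link profile is, you could bucket each backlink’s anchor text and look at the percentages. The Python sketch below does just that; the brand name ‘Acme’, the generic phrase list and the anchors themselves are hypothetical, and the simple substring matching is an assumption for illustration rather than how Google classifies anchors.

```python
from collections import Counter

def anchor_profile(anchors, keyword, brand, generic_phrases):
    """Return the percentage of anchors falling into each bucket."""
    buckets = Counter()
    for anchor in anchors:
        text = anchor.strip().lower()
        if text == keyword:
            buckets["exact-match keyword"] += 1
        elif brand in text:                # brand name or domain, e.g. "acmebats.example"
            buckets["branded"] += 1
        elif text in generic_phrases:
            buckets["generic"] += 1
        else:
            buckets["other"] += 1
    total = len(anchors)
    return {bucket: 100 * count / total for bucket, count in buckets.items()}

# Hypothetical backlink anchors for our table tennis bats site
backlink_anchors = [
    "table tennis bats", "table tennis bats", "Acme Bats", "acmebats.example",
    "click here", "this site", "great bats for beginners", "Acme Bats",
]
profile = anchor_profile(
    backlink_anchors,
    keyword="table tennis bats",
    brand="acme",  # hypothetical brand name
    generic_phrases={"click here", "this site", "here"},
)
print(profile)
```

A profile dominated by the exact-match bucket is the pattern Penguin was built to catch; the mix here leans on branded and generic anchors instead.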

How To Rank Your Website In 2014

For a detailed analysis of SEO in 2014, check out my 13,000-word guide, but if you want it in a nutshell…

So, how does a website succeed today? Well, the good news is that SEO is becoming much harder to game, with high-quality businesses and blogs rising to the top and spammers on their way down. This trend should continue, as Google finally seems to be winning the war on spam, with regular updates to the Panda and Penguin algorithms filtering out more and more low-quality sites. I will be writing in detail about how to succeed with your website, so look out for lots of articles on the topic and subscribe for regular updates, but to summarise, here are the key factors:

- High Quality Unique Content With Regular Updates

- Strong Branding And Identity

- Social Networking (and proof)

- Positioning Yourself As An Expert In Your Field

- Fulfilling A Genuine Need Of Your Visitors

- Building Relationships With Other Websites

- High Quality, White Hat Links From Trusted Resources (quality over quantity)

- Video Marketing

- Good Site Structure, Hierarchy And Internal Linking

So, that’s a brief summary of the evolution of SEO and search engines, which brings us right up to the present day. I will keep this page updated with future changes, which are sure to be just around the corner and will hopefully continue to improve the relevance of results. The war on spam continues, but the tide does appear to have turned.

If you enjoyed reading this article then please share it (wherever you can!) and feel free to leave a comment below with any other points or questions. If you have a website and would like help with preparing a successful content marketing strategy then please contact us.

Recommended Reading

Google algorithm change updates (moz.com) – http://moz.com/google-algorithm-change